Understanding survey bots and tools for data validation: Strategies for identifying possibly fraudulent responses

If you plan to have an open research survey that you distribute through email lists or social media, especially if you indicate that you will be compensating your survey subjects, you should expect to have your survey completed by “bots” and should plan accordingly. This post will try to address the specific scope of the problem and provide suggestions for how to make your survey less likely to be targeted by “bot” authors and other bad actors. It will also offer suggestions for how to add fields to your survey to help identify invalid records in your data.

The term “bot” in the context of online surveys refers to a script or program that is written to fill in the fields of a survey with fake values and then submit the survey, repeating the process many times, with the goal of receiving the promised compensation, also multiple times. Bots like this have been a problem for a long time, not just in academic research, but with any web-based form submission with potential reward, including consumer surveys. There has long been a need to either filter or authenticate responses to get valid data from online data collection tools.

It is important to be clear that this doesn’t happen with every online survey or form. It is a problem that happens specifically to forms and surveys that anyone can fill out if they have the web page link, and when there is a reward of some kind (e.g., gift cards, online game credits, or access to social media sites).

Suggestion for Avoiding Bots in the First Place

Avoid open surveys that offer payment for participation. Instead, pre-screen potential subjects to collect and validate contact information such as emails or phone numbers, and then send unique links to those subjects. If you cannot collect contact information, for example because your survey is fully anonymous, and you are providing compensation in the form of a gift card code that can be redeemed outside the survey (e.g., Amazon gift cards), then delaying compensation, for example with a link that won’t be activated immediately, may be your best option. The delay gives you time to review responses using validation fields such as the ones described below, so that you only compensate valid participants.

Fields for Identifying Fraudulent Records

The following are great tools you can add to any data collection survey. While these are presented in the context of identifying survey “bots”, many are useful for validating any raw data collected online.

- Timestamps

- Branching logic and “impossible” fields

- Attention items

- Repeated items

- Open-ended questions

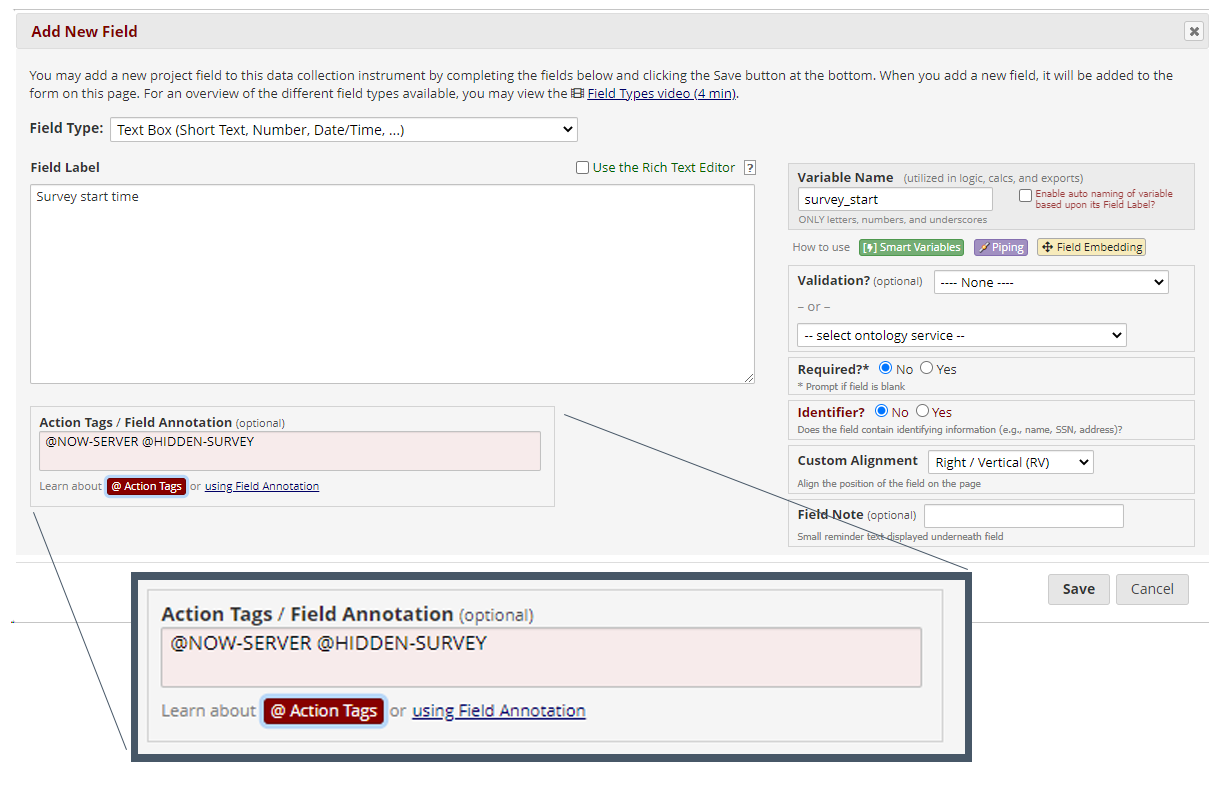

Timestamps: “Timestamps” are fields in your data that automatically populate with the date and time of specific events that happen while someone is completing a survey. You can explicitly include timestamps in the data for both when the survey was started and when it was completed. Depending on the survey software you use, the survey may already create one or more timestamps: test your survey to see what values are included in the completion data. If, as with REDCap surveys, only the survey completion time is captured, you can add a hidden field that captures the start time of the survey. In REDCap, you can add a text field with two Action Tags: @HIDDEN (or @HIDDEN-SURVEY), which hides the field from the person completing the survey, and @NOW-SERVER, which populates the field with the time as calculated by the web server.

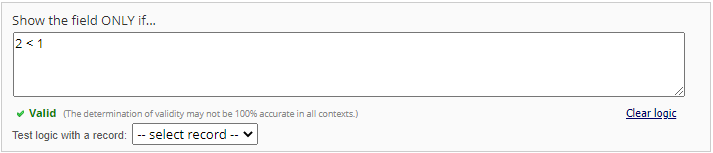

Branching logic and “impossible” fields: The process for building a “bot” script requires a programmer to look at the source code of the survey’s web page and interpret or reverse engineer the logic of the fields. As you develop your survey, you can add logic that creates survey fields that are “impossible” to reach, but which will likely trick a programmer scripting a “bot” to complete the survey. For example, if you have a multiple-choice question asking for your subjects’ favorite color, where the person filling out the survey can only select one answer, and then a following question that is only displayed if the subject previously chose both “red” and “yellow”, that question will never be reached. Specifically, it can’t be reached by a human filling out the survey normally. However, someone writing the script that completes the survey automatically may not realize that, and may script a response to that question, immediately flagging the record as one not completed by human action.

This effect can also be achieved with a simple logic statement that always evaluates as “false”, such as a condition requiring a single-answer field to hold two different values at once.

Attention items: “Attention questions” are designed to see if a person completing a long survey is really reading the questions, and not just mindlessly responding. One may take the form of a question that starts normally, but then directs the subject to make a specific choice, ignoring the rest of the question. Or it could be a question asking for the subject’s favorite color that lists only options that aren’t colors, like “a skateboard”, “careers”, “Pokemon”, or “none of the above”. Another suggestion would be to include an absurd or impossible attention-type question in a series of items asking the subject to rank agreement or disagreement with various statements. These types of questions scattered throughout a long survey could easily be missed by someone scripting a bot, or by an inattentive person, and can help identify likely invalid responses.
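Once the data are exported, checking attention items can be as simple as comparing each one against an answer key. The following is a minimal Python sketch, assuming each record is a dictionary keyed by field name; the field names (`attn_1`, `attn_2`) and coded answers are hypothetical and would need to match your own survey.

```python
# Hypothetical answer key: field name -> the only response an attentive reader would give.
EXPECTED_ATTENTION_ANSWERS = {
    "attn_1": "strongly_disagree",
    "attn_2": "none_of_the_above",
}


def failed_attention_items(record, expected=EXPECTED_ATTENTION_ANSWERS):
    """Return the names of attention fields whose answer differs from the key.

    A missing answer counts as a failure, because record.get() returns None.
    """
    return [name for name, answer in expected.items() if record.get(name) != answer]


careful = {"attn_1": "strongly_disagree", "attn_2": "none_of_the_above"}
careless = {"attn_1": "agree", "attn_2": "none_of_the_above"}

careful_failures = failed_attention_items(careful)
careless_failures = failed_attention_items(careless)
```

A single failed item may only indicate a distracted person, so it is probably better treated as one signal among several than as grounds for exclusion on its own.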

Repeated items: Repeating one or more questions in a survey, just as with attention items, can help identify both fraudulent human responses and scripted responses to a survey. Particularly in the case of demographic items, it can be effective to repeat the request for a specific piece of personal information, such as a zip code, both at the beginning and the end of a survey. If the responses don’t match, then there may be reason to question the validity of the response.
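A repeated-item check can be sketched in a few lines of Python. This example assumes records are dictionaries and that the zip code was asked in two hypothetical fields, `zip_start` and `zip_end`; it ignores differences in whitespace and letter case so that harmless typing variations are not flagged.

```python
def normalize(value):
    """Drop all whitespace and lowercase, so ' 66045 ' and '66045' compare equal."""
    return "".join(str(value).split()).lower()


def repeated_item_mismatch(record, first_field, repeat_field):
    """Return True when the two answers to the same question disagree."""
    first = normalize(record.get(first_field, ""))
    repeat = normalize(record.get(repeat_field, ""))
    return first != repeat


# Hypothetical field names and values for illustration only.
consistent = {"zip_start": "66045", "zip_end": " 66045 "}
inconsistent = {"zip_start": "66045", "zip_end": "90210"}

consistent_flag = repeated_item_mismatch(consistent, "zip_start", "zip_end")
inconsistent_flag = repeated_item_mismatch(inconsistent, "zip_start", "zip_end")
```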

Open-ended questions: Asking survey subjects to provide responses to open-ended questions is another tool to validate survey responses. Generating meaningful, unique responses to open-ended questions is something that earnest survey respondents are far better at than fraudulent respondents and scripts. You can improve the effectiveness of open-ended questions by being very specific in your questions (“What is your favorite weekend mid-day meal?” instead of “What is your favorite meal?”). You should also try to tailor your questions to your subjects’ experience and knowledge, which is also likely to trip up fraudulent and scripted survey responses.
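One rough way to screen open-ended answers is to look for the same text appearing in several records, since genuine respondents rarely write identical answers. The Python sketch below is a heuristic under that assumption, not a method from any survey platform; the record IDs and answers are made up, and flagged answers still need to be read by a person.

```python
import re
from collections import Counter


def normalize_text(text):
    """Lowercase and collapse punctuation and spacing so near-identical answers match."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def duplicated_answers(answers):
    """Return the record IDs whose normalized answer appears in more than one record.

    `answers` maps a record ID to the free-text response for one question.
    Empty answers are skipped rather than treated as duplicates of each other.
    """
    normalized = {rid: normalize_text(text) for rid, text in answers.items()}
    counts = Counter(normalized.values())
    return {rid for rid, text in normalized.items() if text and counts[text] > 1}


sample_answers = {
    "r1": "Grilled cheese and tomato soup",
    "r2": "good",
    "r3": "Good.",
    "r4": "Leftover pad thai",
}
repeated = duplicated_answers(sample_answers)
```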

Validating your data

Once you’ve added one or more of these fields, you can use them to interpret your data. For each field, you need to know what represents both valid and invalid values. You can then “score” or quantify the validity of each record.
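One simple way to score a record is to count how many validation checks it fails, optionally weighting some checks more heavily than others. The Python sketch below assumes you have already reduced each check to a true/false flag; the flag names, weights, and review threshold are all hypothetical choices for illustration.

```python
def questionable_score(flags, weights=None):
    """Sum the weights of all raised flags; a higher score means a more suspect record.

    `flags` maps a check name to True (failed) or False (passed).
    Checks without an explicit weight count as 1.
    """
    weights = weights or {}
    return sum(weights.get(name, 1) for name, raised in flags.items() if raised)


# Hypothetical weights: data in an unreachable field is treated as stronger evidence.
WEIGHTS = {"impossible_field": 3}
REVIEW_THRESHOLD = 3  # assumed cutoff for manual review

record_flags = {"too_fast": True, "impossible_field": True, "zip_mismatch": False}
score = questionable_score(record_flags, WEIGHTS)
needs_review = score >= REVIEW_THRESHOLD
```

Keeping the score and the individual flags in your dataset, rather than deleting records outright, leaves a trail you can revisit if the weights or threshold turn out to be too strict.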

How branching logic can help identify questionable records: Generally, when reviewing any data collected by a survey with branching logic, it’s good to check that there aren’t data in fields that branching logic should not have shown to the survey participants.
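This check can be sketched in Python as follows, assuming each exported record is a dictionary and that you keep a list of fields your branching logic should never display. The field name `color_followup` is hypothetical.

```python
def fields_with_unexpected_data(record, unreachable_fields):
    """Return the unreachable fields that nevertheless contain a value."""
    found = []
    for name in unreachable_fields:
        value = record.get(name)
        # Treat missing values and blank strings as "no data".
        if value is not None and str(value).strip() != "":
            found.append(name)
    return found


# Hypothetical field that no human should be able to reach.
UNREACHABLE = ["color_followup"]

human_record = {"favorite_color": "red", "color_followup": ""}
scripted_record = {"favorite_color": "red", "color_followup": "orange"}

human_hits = fields_with_unexpected_data(human_record, UNREACHABLE)
scripted_hits = fields_with_unexpected_data(scripted_record, UNREACHABLE)
```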

How timestamps can help identify questionable records: It is important to understand the time between when a survey was opened and when the survey was submitted. Even the fastest human cannot fill out a survey as fast as a scripted program can. Completion times that are only seconds after start times are an extremely good indicator that the response was automated.
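As a minimal Python sketch, the elapsed time can be computed from the two timestamps and compared against a minimum plausible duration. The timestamp format shown and the 60-second cutoff are assumptions; check the format your survey software exports, and pick a cutoff by timing yourself or a colleague on the real survey.

```python
from datetime import datetime

# Assumed export format, e.g. "2024-03-01 10:00:12"; adjust to match your data.
TIMESTAMP_FORMAT = "%Y-%m-%d %H:%M:%S"


def completion_seconds(start, end, fmt=TIMESTAMP_FORMAT):
    """Return the number of seconds between the start and completion timestamps."""
    started = datetime.strptime(start, fmt)
    finished = datetime.strptime(end, fmt)
    return (finished - started).total_seconds()


def is_too_fast(start, end, min_seconds=60):
    """Flag a record completed in less than the minimum plausible time."""
    return completion_seconds(start, end) < min_seconds


fast = is_too_fast("2024-03-01 10:00:00", "2024-03-01 10:00:12")
plausible = is_too_fast("2024-03-01 10:00:00", "2024-03-01 10:07:30")
```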

Additionally, someone using a “bot” can complete many surveys in a very short time span. It’s important to examine both the timestamps within individual records and the time between responses. A group of survey records that were all completed at nearly the same time may be the work of someone running a “bot”. Such records are likely to be similar in other ways and, combined with other validation fields, may be easily marked as “questionable” using validity scores and timestamps.
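Groups of near-simultaneous submissions can be found by sorting the completion times and sliding a fixed window across them. The Python sketch below is one possible approach; the two-minute window and minimum group size of three are assumptions that should be tuned to your expected response rate, since a popular survey can receive legitimate responses close together.

```python
from datetime import datetime, timedelta


def find_bursts(completions, window_seconds=120, min_size=3):
    """Return record IDs belonging to any group of at least `min_size`
    submissions completed within `window_seconds` of each other.

    `completions` maps a record ID to its completion time as a datetime.
    """
    ordered = sorted(completions.items(), key=lambda item: item[1])
    window = timedelta(seconds=window_seconds)
    flagged = set()
    start = 0
    for end in range(len(ordered)):
        # Advance the left edge until the window spans at most `window_seconds`.
        while ordered[end][1] - ordered[start][1] > window:
            start += 1
        if end - start + 1 >= min_size:
            flagged.update(record_id for record_id, _ in ordered[start:end + 1])
    return flagged


# Made-up completion times for illustration.
sample_completions = {
    "r1": datetime(2024, 3, 1, 10, 0, 0),
    "r2": datetime(2024, 3, 1, 10, 0, 30),
    "r3": datetime(2024, 3, 1, 10, 1, 0),
    "r4": datetime(2024, 3, 1, 14, 0, 0),
    "r5": datetime(2024, 3, 1, 16, 30, 0),
}
burst_ids = find_bursts(sample_completions)
```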

Work with the Institutional Review Board (IRB)

If you are using surveys for human subjects research, you are already required to have your survey reviewed by the IRB. As part of that review, you identify who is eligible to participate in your research and ensure that you are recruiting your participants in a fair manner. Work with the IRB to clearly define the subject pool that will support your research, providing those definitions in the consent, and including those definitions as questions in the survey. Being precise with these definitions will doubly serve to comply with the IRB and to help you exclude ineligible responses from the collected data.

For example, you can limit your eligible participants by age or geography. Including repeated questions and branching logic questions about these facts will support the goal of protecting your research subjects, as well as helping with data validation.

Conclusion

While the ever-increasing prevalence of “bots” and fraudulent responses is concerning, especially in response to open surveys offering compensation, there are a variety of tools available when building surveys to identify and mitigate the problem. In the end, these tools can be effective in any data collection project where there may be questionable responses. They give you the means to confidently assess whether subject responses are likely to have been provided by a member of your target population, and thus that they will contribute meaningfully to your research.

Additional reading:

Ensuring survey research data integrity in the era of internet bots.

Bots started sabotaging my online research. I fought back.

Lisa Hallberg is a research engineer with the Life Span Institute's Research Design and Analysis team.